DARPA’s ‘Cortical Modem’ Will Plug Straight Into Your BRAIN

By Darren Pauli | The Register

The Defense Advanced Research Projects Agency (DARPA) is developing a brain interface it hopes could inject images directly into the visual cortex.

News of the “Cortical Modem” project has emerged in transhumanist magazine Humanity Plus, which reports the agency is working on a direct neural interface (DNI) chip that could be used for human enhancement and motor-function repair.

Project head Dr Phillip Alvelda, Biological Technologies chief with the agency, told the Biology Is Technology conference in Silicon Valley last week that the project's short-term goal was to build a US$10 device the size of two stacked nickels that could deliver images without the need for glasses or similar technology.

The project builds on research by Dr Karl Deisseroth, whose neuroscience work examines how brain circuits give rise to patterns of behaviour.

Specifically, it draws on optogenetics, Deisseroth’s field, in which light-sensitive proteins from algae are inserted into neurons so that those neurons can then be controlled with pulses of light.

“The short term goal of the project is the development of a device about the size of two stacked nickels with a cost of goods on the order of $10 which would enable a simple visual display via a direct interface to the visual cortex with the visual fidelity of something like an early LED digital clock,” the publication reported.

“The implications of this project are astounding.”

The seemingly dreamy research has so far been limited to animal studies, such as real-time imaging of a zebrafish brain with some 85,000 neurons, because the technique requires messing with neuron DNA. The ‘crude device’ would also be a long way off high-fidelity augmented reality, the site reported.

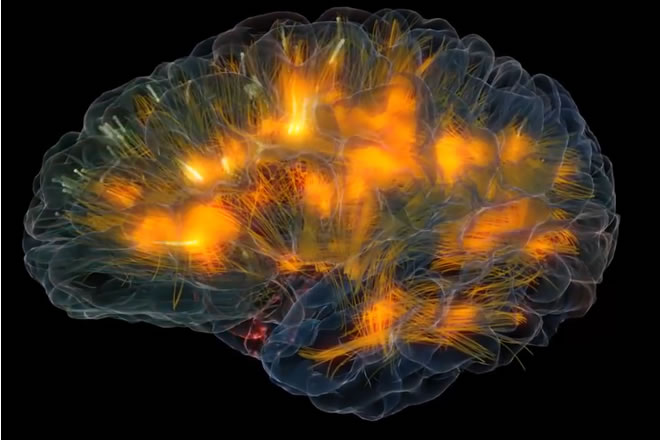

Video: Glass brain flythrough – Gazzaleylab / SCCN / Neuroscapelab

This is an anatomically realistic 3D brain visualization depicting real-time source-localized activity (power and “effective” connectivity) from EEG (electroencephalographic) signals. Each color represents source power and connectivity in a different frequency band (theta, alpha, beta, gamma), and the golden lines are white matter anatomical fiber tracts. Estimated information transfer between brain regions is visualized as pulses of light flowing along the fiber tracts connecting the regions.
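The caption does not say how per-band power is computed. As a rough illustration only, the sketch below estimates the power a single signal carries in each band using a naive discrete Fourier transform. The band edges in `BANDS` are common textbook values and are an assumption, as are the helper name `band_powers` and the synthetic test signal. The Glass Brain pipeline performs its own source-space spectral estimation, which this does not reproduce.

```python
import math

# Assumed, approximate EEG band edges in Hz (conventions vary between labs).
BANDS = {
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 45.0),
}

def band_powers(signal, fs):
    """Sum |X[k]|^2 / n over the DFT bins that fall inside each band.

    Uses a naive O(n^2) DFT to stay dependency-free; real pipelines use an
    FFT, windowing and averaging (e.g. Welch's method).
    """
    n = len(signal)
    powers = {name: 0.0 for name in BANDS}
    for k in range(1, n // 2 + 1):
        freq = k * fs / n  # frequency of DFT bin k in Hz
        re = sum(x * math.cos(2 * math.pi * k * t / n) for t, x in enumerate(signal))
        im = -sum(x * math.sin(2 * math.pi * k * t / n) for t, x in enumerate(signal))
        p = (re * re + im * im) / n
        for name, (lo, hi) in BANDS.items():
            if lo <= freq < hi:
                powers[name] += p
    return powers

# Hypothetical test signal: 1 s sampled at 128 Hz, with a strong 10 Hz
# (alpha) component and a weaker 20 Hz (beta) component.
fs = 128
sig = [math.sin(2 * math.pi * 10 * t / fs) + 0.3 * math.sin(2 * math.pi * 20 * t / fs)
       for t in range(fs)]
powers = band_powers(sig, fs)
print(max(powers, key=powers.get))  # alpha
```

Because the test tones complete a whole number of cycles in the one-second window, each lands in a single DFT bin, so nearly all of the energy is attributed to the alpha band and a smaller share to beta.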

The modeling pipeline uses MRI (Magnetic Resonance Imaging) brain scanning to generate a high-resolution 3D model of an individual’s brain, skull, and scalp tissue, and DTI (Diffusion Tensor Imaging) to reconstruct white matter tracts. BCILAB (http://sccn.ucsd.edu/wiki/BCILAB) and SIFT (http://sccn.ucsd.edu/wiki/SIFT) then remove artifacts and statistically reconstruct the locations and dynamics (amplitude and multivariate Granger-causal (http://www.scholarpedia.org/article/G…) interactions) of multiple sources of activity inside the brain from signals measured at electrodes on the scalp. In this demo, those signals come from a 64-channel “wet” mobile system by Cognionics/BrainVision (http://www.cognionics.com).
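Granger causality asks whether the past of one signal improves prediction of another beyond what that signal's own past provides. The minimal bivariate, time-domain sketch below fits two least-squares autoregressive models, one with and one without the candidate source's lagged values, and reports the log ratio of their residual sums of squares. It is not SIFT's implementation, which fits multivariate autoregressive models across many sources. The function names, the model order `p=2` and the simulated data are all hypothetical.

```python
import math
import random

def solve(a, b):
    """Solve the square system a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w

def rss(rows, target):
    """Fit target ~ rows by ordinary least squares (normal equations); return the RSS."""
    k = len(rows[0])
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    atb = [sum(r[i] * t for r, t in zip(rows, target)) for i in range(k)]
    w = solve(ata, atb)
    return sum((t - sum(wi * ri for wi, ri in zip(w, r))) ** 2 for r, t in zip(rows, target))

def granger(source, dest, p=2):
    """Log ratio of RSS for dest's own past (restricted) vs. own past plus source's past (full).

    Values near 0 mean the source's past adds nothing; larger values mean it helps.
    """
    idx = range(p, len(dest))
    target = [dest[t] for t in idx]
    restricted = [[1.0] + [dest[t - lag] for lag in range(1, p + 1)] for t in idx]
    full = [row + [source[t - lag] for lag in range(1, p + 1)]
            for row, t in zip(restricted, idx)]
    return math.log(rss(restricted, target) / rss(full, target))

# Simulated data: x is white noise and y is driven by x's previous value,
# so x should "Granger-cause" y but not the other way round.
rng = random.Random(0)
n = 500
x = [rng.gauss(0, 1) for _ in range(n)]
y = [0.0]
for t in range(1, n):
    y.append(0.5 * y[t - 1] + 0.8 * x[t - 1] + 0.2 * rng.gauss(0, 1))

print(f"x -> y: {granger(x, y):.3f}")
print(f"y -> x: {granger(y, x):.3f}")
```

The log-ratio form (sometimes called Geweke's measure) is used here because it is zero when the extra lags do not help and grows as they explain more of the target's variance. A frequency-resolved variant is what allows connectivity to be shown per band, as in the visualization, but that goes beyond this sketch.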

The final visualization is done in Unity and allows the user to fly around and through the brain with a gamepad while seeing real-time live brain activity from someone wearing an EEG cap.

Team:

– Gazzaley Lab / Neuroscape lab, UCSF: Adam Gazzaley, Roger Anguera, Rajat Jain, David Ziegler, John Fesenko, Morgan Hough

– Swartz Center for Computational Neuroscience, UCSD: Tim Mullen & Christian Kothe

http://neuroscapelab.com/projects/glass-brain/

http://gazzaleylab.ucsf.edu/

http://sccn.ucsd.edu/